Complete code for training a Variational Autoencoder

Here is the complete code to train a Variational Autoencoder (VAE) on MNIST and generate images that resemble the training data:

import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, Model
import matplotlib.pyplot as plt

# Input and latent-space dimensions
input_dim = (28, 28, 1)
latent_dim = 2

# Encoder
inputs = layers.Input(shape=input_dim)
# Two stride-2 convolutions downsample 28x28 -> 14x14 -> 7x7
x = layers.Conv2D(32, 3, activation='relu', strides=2, padding='same')(inputs)
x = layers.Conv2D(64, 3, activation='relu', strides=2, padding='same')(x)
x = layers.Flatten()(x)
x = layers.Dense(16, activation='relu')(x)
# Mean and log-variance of the approximate posterior q(z|x)
z_mean = layers.Dense(latent_dim)(x)
z_log_var = layers.Dense(latent_dim)(x)

# Sampling function (reparameterization trick)
def sampling(args):
    z_mean, z_log_var = args
    batch = tf.shape(z_mean)[0]
    dim = tf.shape(z_mean)[1]
    # epsilon ~ N(0, I); exp(0.5 * log_var) is the standard deviation
    epsilon = tf.keras.backend.random_normal(shape=(batch, dim))
    return z_mean + tf.exp(0.5 * z_log_var) * epsilon

z = layers.Lambda(sampling)([z_mean, z_log_var])

# Decoder
decoder_inputs = layers.Input(shape=(latent_dim,))
x = layers.Dense(7 * 7 * 64, activation='relu')(decoder_inputs)
x = layers.Reshape((7, 7, 64))(x)
# Two stride-2 transposed convolutions upsample 7x7 -> 14x14 -> 28x28
x = layers.Conv2DTranspose(64, 3, activation='relu', strides=2, padding='same')(x)
x = layers.Conv2DTranspose(32, 3, activation='relu', strides=2, padding='same')(x)
# Sigmoid keeps pixel values in [0, 1], matching the normalized inputs
outputs = layers.Conv2DTranspose(1, 3, activation='sigmoid', padding='same')(x)

# Build the models
encoder = Model(inputs, [z_mean, z_log_var, z], name='encoder')
decoder = Model(decoder_inputs, outputs, name='decoder')
# The VAE decodes the sampled z (third encoder output)
vae_outputs = decoder(encoder(inputs)[2])
vae = Model(inputs, vae_outputs, name='vae')

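# Loss function
# flatten() also collapses the batch axis, so this is the mean per-pixel
# cross-entropy over the whole batch, rescaled below by the 28 * 28 pixels
reconstruction_loss = tf.keras.losses.binary_crossentropy(tf.keras.backend.flatten(inputs), tf.keras.backend.flatten(vae_outputs))
reconstruction_loss *= input_dim[0] * input_dim[1]
# Closed-form KL divergence between q(z|x) and the standard normal prior,
# summed over the latent dimensions (one value per sample)
kl_loss = 1 + z_log_var - tf.square(z_mean) - tf.exp(z_log_var)
kl_loss = tf.reduce_sum(kl_loss, axis=-1)
kl_loss *= -0.5
vae_loss = tf.reduce_mean(reconstruction_loss + kl_loss)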

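# Compile the model
# The loss is attached with add_loss, so compile() needs no loss argument.
# Note: passing symbolic tensors to add_loss is a TF 2.x (Keras 2) pattern;
# newer Keras versions may reject it.
vae.add_loss(vae_loss)
vae.compile(optimizer='adam')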

# Load and preprocess the data
from tensorflow.keras.datasets import mnist

(x_train, _), (x_test, _) = mnist.load_data()
# Add a channel axis and scale pixel values to [0, 1]
x_train = np.expand_dims(x_train, -1).astype("float32") / 255
x_test = np.expand_dims(x_test, -1).astype("float32") / 255

# Train the VAE (no targets: the loss was attached with add_loss)
vae.fit(x_train, epochs=50, batch_size=128, validation_data=(x_test, None))

# Generate new images
def plot_generated_images(decoded_imgs, n=10):
    plt.figure(figsize=(20, 4))
    for i in range(n):
        ax = plt.subplot(2, n, i + 1)
        plt.imshow(decoded_imgs[i].reshape(28, 28), cmap='gray')
        plt.axis('off')
    plt.show()

# Sample from the latent space (standard normal prior)
n_samples = 10
random_latent_vectors = np.random.normal(size=(n_samples, latent_dim))

# Decode the samples into new images
generated_images = decoder.predict(random_latent_vectors)

# Display the generated images
plot_generated_images(generated_images, n_samples)
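
The `kl_loss` lines in the script use the closed form of the KL divergence between a diagonal Gaussian and the standard normal prior, $-\tfrac{1}{2}\sum_j \left(1 + \log\sigma_j^2 - \mu_j^2 - \sigma_j^2\right)$. As a sanity check, the sketch below compares that formula with a Monte Carlo estimate built on the same reparameterized sampling as `sampling`. It uses only the Python standard library; the function names are illustrative and are not part of the script above.

```python
import math
import random


def kl_closed_form(mean, log_var):
    """KL(N(mean, exp(log_var)) || N(0, I)), summed over latent dimensions."""
    return -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mean, log_var)
    )


def sample_z(mean, log_var, rng):
    """Reparameterization trick: z = mean + sigma * epsilon, epsilon ~ N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_var)
    ]


def log_normal(z, mean, log_var):
    """Log density of a diagonal Gaussian evaluated at z."""
    return sum(
        -0.5 * (math.log(2.0 * math.pi) + lv + (zi - m) ** 2 / math.exp(lv))
        for zi, m, lv in zip(z, mean, log_var)
    )


def kl_monte_carlo(mean, log_var, n_samples=50_000, seed=0):
    """Estimate the same KL as the average of log q(z) - log p(z), z ~ q."""
    rng = random.Random(seed)
    zeros = [0.0] * len(mean)
    total = 0.0
    for _ in range(n_samples):
        z = sample_z(mean, log_var, rng)
        total += log_normal(z, mean, log_var) - log_normal(z, zeros, zeros)
    return total / n_samples


if __name__ == "__main__":
    mean, log_var = [1.0, -0.5], [0.2, -0.3]  # arbitrary example values
    print("closed form:", kl_closed_form(mean, log_var))
    print("monte carlo:", kl_monte_carlo(mean, log_var))
```

The two printed values should agree up to sampling noise, and the closed form is exactly zero when the mean is 0 and the log-variance is 0, which is the case where the encoder output matches the prior.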

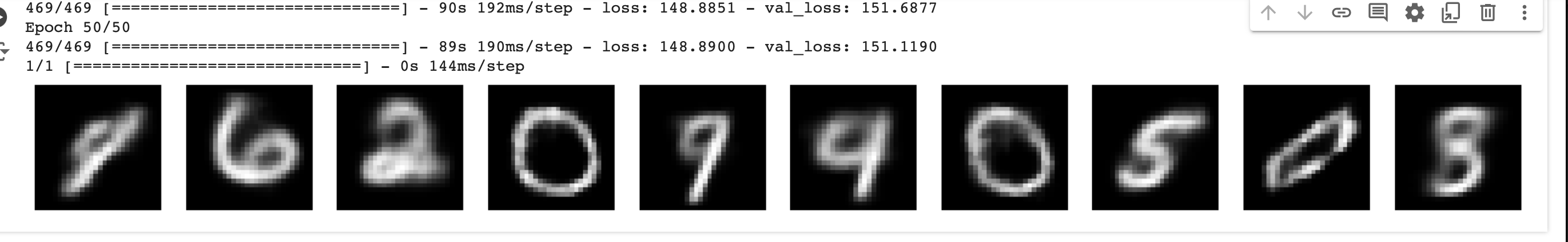

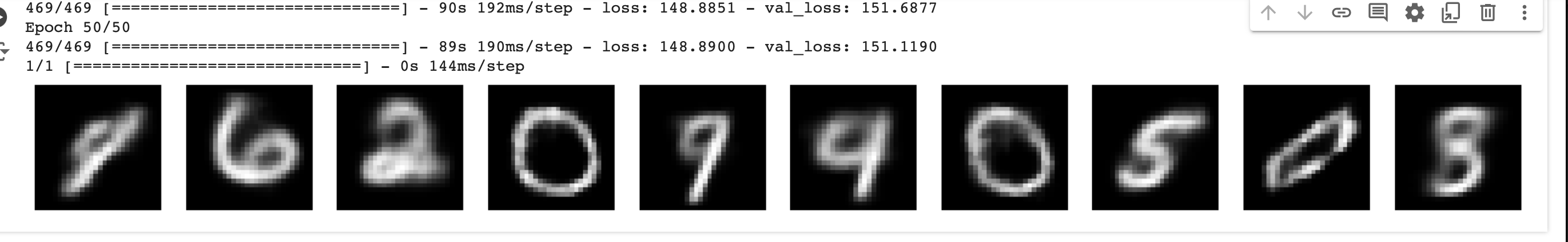

Response

Here are the generated images.
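
Because `latent_dim` is 2, an alternative to random sampling is to decode a regular grid of latent points, which tends to show how the digits change across the latent space. The helper below is a hypothetical addition, not part of the original script; it only builds the grid coordinates, and the commented usage assumes the trained `decoder` and the `np` import from the script.

```python
def latent_grid(n=15, limit=2.0):
    """Return n * n [z1, z2] points on a regular grid over [-limit, limit]^2.

    Points are listed row by row, from the largest z2 (top row) to the
    smallest, so the order matches a top-to-bottom image layout.
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    step = 2.0 * limit / (n - 1)
    axis = [-limit + i * step for i in range(n)]
    return [[z1, z2] for z2 in reversed(axis) for z1 in axis]


# Assumed usage with the trained decoder from the script:
#   grid = np.array(latent_grid(n=15))   # shape (225, 2)
#   images = decoder.predict(grid)       # shape (225, 28, 28, 1)
```

The range of ±2 is an assumption: it covers most of the mass of the standard normal prior, but a wider or narrower range may suit a particular trained model better.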